Amid rapid advances in artificial intelligence, it seems we have entered an era in which being human may no longer be a prerequisite for landing a job. AI-driven deception in recruitment is spreading quickly through the corporate world, as hackers and scammers use deepfake technology to pose as qualified applicants. The recent experience of Pindrop Security serves as a warning: these increasingly sophisticated schemes could reshape how companies hire.

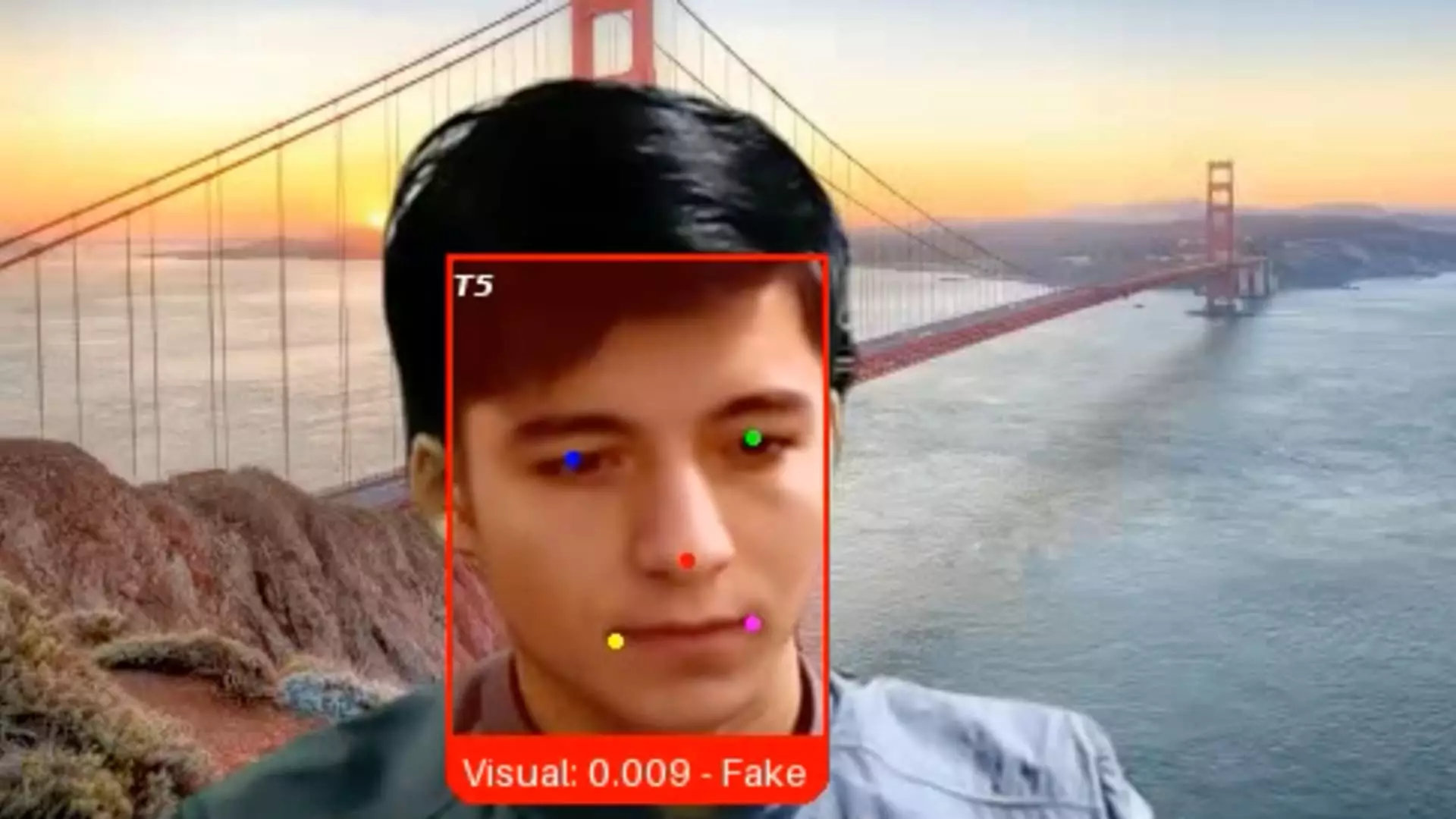

When Pindrop interviewed a candidate dubbed “Ivan X,” the company discovered that this seemingly qualified applicant was hiding behind a persona generated with AI, including deepfake video. Such deception raises serious questions about identity verification and the trustworthiness of hiring processes: tools meant to streamline recruitment can instead expose companies to security risks they must now contend with. Pindrop’s CEO, Vijay Balasubramaniyan, captured the crux of the issue: “Gen AI has blurred the line between what it is to be human and what it means to be machine.” Corporations need to confront this uncomfortable truth head-on.

The Plague of Artificial Candidates

Gartner predicts that by 2028, one in four job candidates will be fake, complete with fabricated resumes, photo IDs, and employment histories. This is more than an inconvenience; it is a serious threat that exposes companies to reputational and financial damage. An impostor in your workplace can do more than collect an undeserved paycheck: they can install malware, steal customer data, or disrupt business operations altogether.

Unfortunately, many organizations remain unaware of the growing threat posed by AI-enhanced fraudsters. According to Ben Sesser, CEO of the hiring-software company BrightHire, the hiring process has become a prime target for exploitation precisely because it is inherently human. Managers tend to assume their traditional vetting methods are sufficient and can overlook the new complications these technologies introduce. Meanwhile, the boom in work-from-home positions has handed scammers a goldmine, driving an alarming rise in fraudulent applications.

Corporate Vigilance: The Need for Robust Verification Systems

To protect against this rising tide of deception, companies must adopt more stringent verification measures. Scrutinizing applicants’ qualifications is no longer enough; organizations must proactively invest in identity verification technology. The continued infiltration of remote workforces by people with dubious backgrounds calls for a broader set of hiring safeguards capable of rooting out fake profiles. Identity verification firms such as iDenfy and Jumio have stepped in to fill this gap, yet many companies still do not realize how pervasive the threat of fraudulent candidates has become.

The case of Lili Infante’s startup, CAT Labs, illustrates why weak screening is risky in practice. With suspicious applications flooding in and critical roles to fill, companies face a difficult question: how do they distinguish genuine talent from fraudsters? Complicating matters, many fraudsters present the kind of qualifications typical of top-tier candidates, raising the concern that organizations impressed by apparent expertise may overlook red flags.

Global Implications and National Security Risks

The problem extends beyond corporate America; it has become a national security concern. The Justice Department has alleged that more than 300 U.S. companies unknowingly employed impostors linked to North Korea, whose operatives used stolen American identities to secure remote jobs and sent millions of dollars back to help fund the regime’s weapons program. Given these stakes, hiring managers must consider not only the well-being of their own organizations but also their role in protecting national security.

Looking ahead, deepfakes and other AI technologies will almost certainly grow more sophisticated, making fraud steadily harder to detect. As Balasubramaniyan put it, “We are no longer able to trust our eyes and ears.” Businesses that fail to adopt technologies capable of authenticating applicants’ true identities risk letting malicious impostors, disguised as competent employees, infiltrate their workforce.

As the corporate world continues to embrace remote work, the threat of AI-driven employment fraud looms large. Organizations must act now to make rigorous identity verification an integral part of the hiring process. Without that vigilance, deceit and manipulation could undermine the legitimate workforce, ushering in a new age of uncertainty for employers and employees alike.